Artificial intelligence isn’t just transforming how we build and operate—it’s also redefining how we store, manage, and move data.

As AI adoption accelerates, many organizations are discovering that their existing cloud storage strategies—designed for more predictable, human-driven workloads—are buckling under the pressure. Storage costs are rising fast. Architectures are becoming brittle. Governance is falling behind.

Welcome to the AI Data Tsunami.

In this post, we’ll break down:

- What’s fueling this surge in data volume and complexity

- The top five ways AI disrupts traditional storage models

- How software-defined cloud storage can help you weather the storm

The Root Cause: AI Generates, Consumes, and Replicates Massive Data Sets

AI systems don’t just need a lot of data—they create even more:

- Raw data ingested for training and fine-tuning

- Feature-engineered datasets and embeddings

- Model checkpoints, logs, and artifacts

- Inference results and feedback loops

- Clones of datasets for experimentation, compliance, and backup

This data isn’t static—it’s high-volume, high-velocity, and high-variability. The result? A flood of files and objects that most legacy cloud storage architectures simply weren’t built to handle.

Five Ways AI Breaks Your Cloud Storage Strategy

Let’s look at the source of the Tsunami—and why it’s not just about “buying more space.”

1. The AI Data Explosion

AI workloads scale fast—especially in early experimentation. Teams create multiple versions of datasets and models, each slightly tweaked or tuned. Dev/test/staging environments are often isolated copies. Add auto-logging, continuous training, and versioning, and storage usage can grow 5–10× in months.

Risk: Exploding storage costs with no visibility or cleanup plans.

2. Unpredictable Access Patterns and Costly Tiering Mismatches

Traditional cloud storage strategies rely on tiering: cold, warm, and hot. But AI workloads access data nonlinearly—pulling archived files mid-training, or hammering metadata for inference.

This leads to:

- Frequent retrieval fees from cold storage

- Unexpected latency bottlenecks

- Teams overcompensating by storing everything in the highest tier

Risk: Performance issues and runaway costs from poorly optimized access.
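
To make the tiering trade-off concrete, here is a minimal sketch of the underlying arithmetic: monthly cost is storage cost plus retrieval cost, so the cheapest tier depends on how often the data is actually read. The tier names, the `Tier` class, and every price below are hypothetical placeholders, not any provider's real rates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    storage_per_gb_month: float  # hypothetical price in USD
    retrieval_per_gb: float      # hypothetical price in USD


# Illustrative prices only; substitute your provider's actual rates.
TIERS = [
    Tier("hot", 0.023, 0.00),
    Tier("warm", 0.0125, 0.01),
    Tier("cold", 0.004, 0.03),
]


def monthly_cost(tier: Tier, size_gb: float, full_reads_per_month: float) -> float:
    """Storage cost plus the cost of reading the whole dataset N times a month."""
    storage = size_gb * tier.storage_per_gb_month
    retrieval = size_gb * full_reads_per_month * tier.retrieval_per_gb
    return storage + retrieval


def cheapest_tier(size_gb: float, full_reads_per_month: float) -> Tier:
    """Pick the tier with the lowest total monthly cost for this access rate."""
    return min(TIERS, key=lambda t: monthly_cost(t, size_gb, full_reads_per_month))


if __name__ == "__main__":
    for reads in (0, 0.5, 5):
        tier = cheapest_tier(1000, reads)
        print(f"{reads} full reads/month -> {tier.name}")
```

With these placeholder prices, a 1,000 GB dataset that is never read is cheapest in the cold tier, while the same dataset read in full five times a month is cheapest in the hot tier. A static rule that ignores read frequency will get one of those cases wrong.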

3. Pipeline Bloat and Silent Duplication

From data preprocessing scripts to model training frameworks, AI pipelines leave behind:

- Temporary artifacts

- Intermediate datasets

- Log files and checkpoint snapshots

- Slightly different versions of the same data

These files are often orphaned—yet persist across backup cycles and storage snapshots.

Risk: You’re backing up and replicating garbage without knowing it.
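
One way to surface silent duplication is to group files by a hash of their contents. The sketch below assumes the pipeline output is reachable as a local or mounted directory; the function names are illustrative. It only catches byte-identical copies, so near-duplicates ("slightly different versions of the same data") would need a different technique.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large artifacts don't fill memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(root: Path) -> dict[str, list[Path]]:
    """Map each content hash to the files sharing it, keeping groups of 2+."""
    groups: dict[str, list[Path]] = defaultdict(list)
    for path in sorted(root.rglob("*")):
        if path.is_file():
            groups[file_digest(path)].append(path)
    return {digest: paths for digest, paths in groups.items() if len(paths) > 1}


def reclaimable_bytes(duplicates: dict[str, list[Path]]) -> int:
    """Bytes freed if all but one copy in each group were removed."""
    return sum(p.stat().st_size for paths in duplicates.values() for p in paths[1:])
```

Running `find_duplicates` over a checkpoint or staging directory before a backup cycle gives a rough estimate, via `reclaimable_bytes`, of how much of that backup is redundant.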

4. Backup, Replication, and Egress Blowouts

AI teams want fast, global access to datasets and models. To provide it, IT teams enable:

- Multi-region replication

- Cross-cloud backups

- Model sharing via APIs and data lakes

But this creates data duplication at scale, leading to:

- Massive egress fees

- Redundant storage charges

- Longer recovery windows and backup times

Risk: Resiliency measures that can triple your cloud spend.

5. Shadow AI = Shadow Storage

As teams move fast with AI experiments, storage becomes increasingly fragmented and hidden:

- New buckets or volumes spun up without governance

- Sensitive data uploaded without compliance reviews

- Legacy datasets left in high-cost tiers “just in case”

Without centralized control, these shadow environments evade IT governance, budgeting, and security policies.

Risk: Loss of control, increased risk, and cloud billing surprises.
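
A simple starting point for finding shadow storage is an audit that flags buckets missing governance tags. The sketch below works on plain in-memory records rather than any real cloud API; the `Bucket` class, the tag names in `REQUIRED_TAGS`, and the bucket names are all hypothetical and stand in for whatever inventory your provider exposes.

```python
from dataclasses import dataclass, field

# Hypothetical tagging policy; adjust to your organization's requirements.
REQUIRED_TAGS = {"owner", "cost-center", "data-classification"}


@dataclass
class Bucket:
    name: str
    tags: dict[str, str] = field(default_factory=dict)


def missing_tags(bucket: Bucket) -> set[str]:
    """Required tags that are absent or blank on this bucket."""
    present = {key for key, value in bucket.tags.items() if value.strip()}
    return REQUIRED_TAGS - present


def audit(buckets: list[Bucket]) -> dict[str, list[str]]:
    """Return {bucket name: sorted missing tags} for non-compliant buckets only."""
    report: dict[str, list[str]] = {}
    for bucket in buckets:
        gaps = missing_tags(bucket)
        if gaps:
            report[bucket.name] = sorted(gaps)
    return report


if __name__ == "__main__":
    inventory = [
        Bucket("ml-train", {"owner": "ml-platform", "cost-center": "1234",
                            "data-classification": "internal"}),
        Bucket("tmp-exp", {"owner": "alice"}),
    ]
    for name, gaps in audit(inventory).items():
        print(f"{name}: missing {', '.join(gaps)}")
```

Treating a blank tag value as missing is a deliberate choice here: a bucket tagged `owner: ""` is no easier to trace than one with no owner tag at all.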

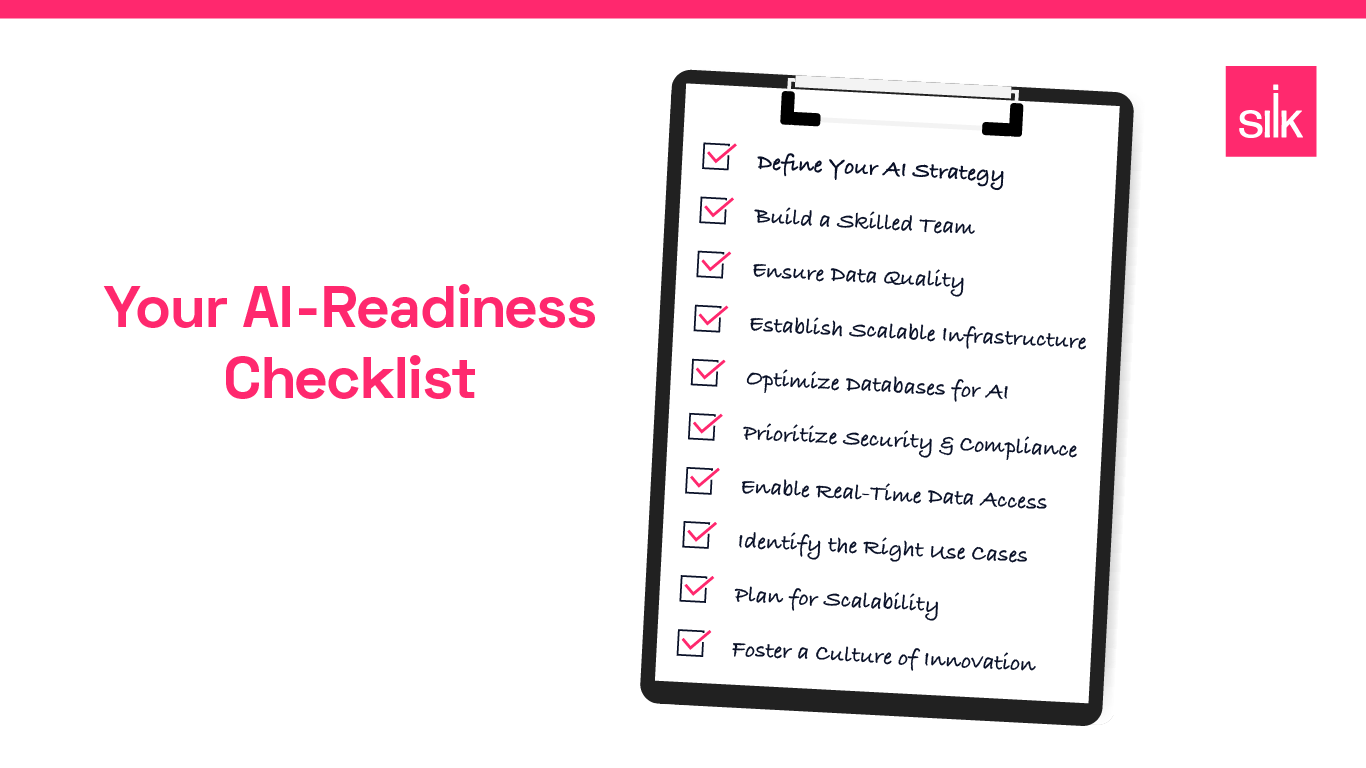

The Smart Response: Software‑Defined Cloud Storage (SDCS)

You can’t stop the AI data surge—but you can outsmart it.

Software-defined cloud storage is a modern architectural approach that decouples your storage control plane from the underlying hardware or cloud provider. It gives you a programmable, policy-driven way to manage data across environments, providers, and access patterns.

Here’s how SDCS tackles the five challenges above:

| AI Challenge | SDCS Advantage |
|---|---|
| Data Explosion | Global deduplication, compression, and usage analytics reduce physical storage and eliminate waste. |
| Tiering Mismatches | Intelligent, real-time tiering moves data to the optimal storage class based on actual usage – not static rules. |
| Pipeline Bloat | Automated cleanup policies and version-aware metadata tracking eliminate redundant and temporary data. |
| Replication Overload | Vendor-neutral replication and erasure coding let you meet RPO/RTO goals without multiplying costs. |
| Shadow Storage | Central dashboards and policy engines give IT visibility and control – even for self-service AI teams. |
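
The erasure coding entry in the table comes down to simple arithmetic. Full replication stores one raw byte per logical byte for every copy, while a k+m erasure code stores (k + m) / k raw bytes per logical byte. The sketch below is a generic illustration of that ratio, not a description of any specific product; the 10+4 layout and the 100 TB figure are assumptions chosen for the example.

```python
def replication_overhead(copies: int) -> float:
    """Raw bytes stored per logical byte with full-copy replication."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return float(copies)


def erasure_coding_overhead(data_shards: int, parity_shards: int) -> float:
    """Raw bytes stored per logical byte with a k+m erasure code.

    With a maximum-distance-separable code such as Reed-Solomon, any k of
    the k+m shards are enough to rebuild the data, so up to m shards can
    be lost.
    """
    if data_shards < 1 or parity_shards < 0:
        raise ValueError("need data_shards >= 1 and parity_shards >= 0")
    return (data_shards + parity_shards) / data_shards


def raw_capacity_tb(logical_tb: float, overhead: float) -> float:
    """Raw capacity needed to hold logical_tb at the given overhead ratio."""
    return logical_tb * overhead


if __name__ == "__main__":
    logical_tb = 100  # assumed dataset size for illustration
    three_copies = raw_capacity_tb(logical_tb, replication_overhead(3))
    ec_10_4 = raw_capacity_tb(logical_tb, erasure_coding_overhead(10, 4))
    print(f"3 copies: {three_copies:.0f} TB raw")
    print(f"10+4 erasure code: {ec_10_4:.0f} TB raw")
```

For 100 TB of logical data, three full copies need 300 TB of raw capacity, while a 10+4 layout needs about 140 TB and still tolerates the loss of four shards. The trade-off is that rebuilding lost data means reading from k surviving shards, which can add latency and network traffic.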

SDCS provides:

- Real-time data: feeds data to AI workloads substantially faster than cloud-native storage

- Efficiency: fewer cloud resources needed to support demanding workloads

- Copy data management: zero-footprint, zero-cost clones enable unlimited copies of data for all stakeholders

- Vendor independence: avoid lock-in and optimize cost-performance ratios

Final Thoughts: It’s Not Just About Storage Anymore

AI has turned data from a passive asset into an active, volatile, and menacing force. If you’re not evolving your storage architecture, you won’t be ready for AI at scale.

Software-defined cloud storage is the foundation for:

- Scalable AI experimentation

- Cost-efficient model deployment

- Accurate and timely AI-driven answers

It helps you ride the AI wave—not drown in it.

Ready to Rein in Your AI Storage Chaos?

The AI tsunami is here—let’s make sure your data has a software-defined cloud storage surfboard to ride the wave.

Let's Talk