Real-Time AI Inferencing — On Live Production Data

Silk enables real-time AI inferencing – without destabilizing production systems or driving up cloud costs.

Real-Time AI Breaks at the Data Layer

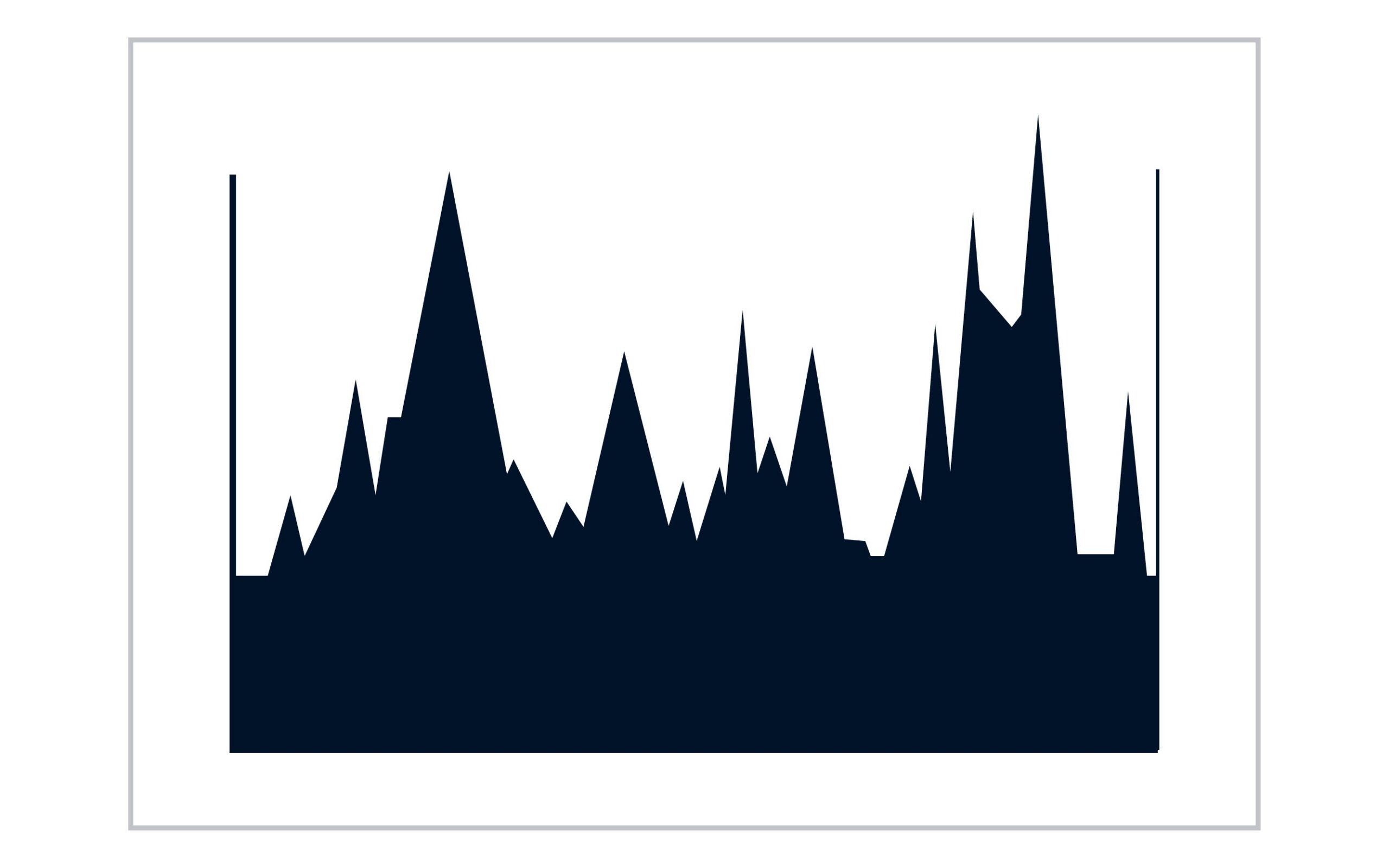

AI inferencing introduces bursty, non-human access patterns (often equivalent to thousands of concurrent users) against the same mission-critical databases that run the business. Traditional infrastructure can't absorb these spikes without added latency, operational risk, or runaway overprovisioning.

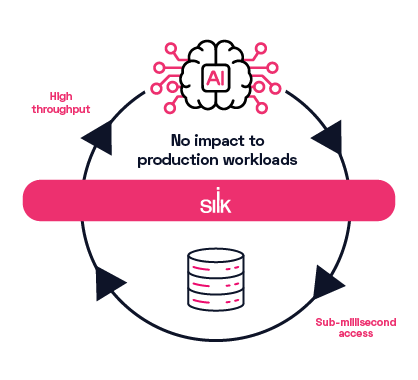

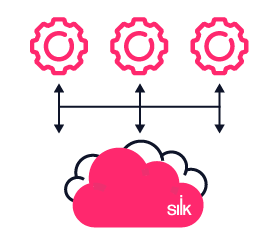

Silk eliminates the root cause: performance tied to capacity and static configuration. As a software-defined SAN and cloud acceleration layer, Silk delivers an unlimited data layer beneath applications, so AI, analytics, and transactional workloads can run against the same live production data, each automatically receiving the performance it needs.

Real-Time AI, Without Disruption

Live-Context Inferencing on Production Data

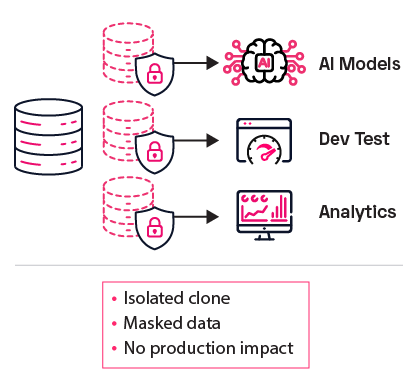

Silk enables AI models to run directly against authoritative, real-time enterprise data. Instead of relying on delayed replicas or stale pipelines, inferencing stays grounded in live production context, which improves accuracy and business relevance.

Predictable Latency Under Inference Spikes

AI workloads generate bursty, unpredictable demand that can overwhelm traditional storage. Silk keeps latency stable even under heavy inference spikes, so real-time AI performance remains consistent as usage scales.

(Comparison graphic: Before Silk vs. With Silk)

No Noisy Neighbors Between AI and OLTP

Inferencing, analytics, and transactional systems all compete for the same data layer. Silk dynamically governs performance across workloads with conflicting access patterns, eliminating contention and ensuring production applications stay protected.

(Comparison graphic: Before Silk vs. With Silk)

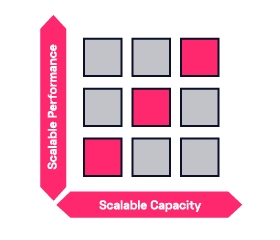

Scale AI Without Overprovisioning

Most organizations oversize infrastructure just to absorb peak inference demand. Silk decouples performance from capacity, allowing AI deployments to scale efficiently without driving up compute and storage costs.

(Comparison graphic: Before Silk vs. With Silk)

Enterprise AI That’s Production-Safe by Design

Real-time inferencing shouldn’t come at the expense of stability. Silk makes AI workloads safe to run alongside systems of record by delivering predictable performance and controlled access at the data layer.

Sentara's AI Initiative Is Enabled by Silk's Faster Performance and Reduced Cloud Costs

Make Live-Context AI Practical — At Enterprise Scale

Real-time AI inferencing doesn’t fail because of models.

It fails when the data layer becomes the bottleneck.

Silk makes live-context AI an architectural outcome—not a science experiment.